A Unified Operating Model for Cloud, Data, and AI

Gain real-time visibility into AI performance, cost, quality, and reliability across every pipeline, model, agent, and cloud environment.

AWS Partner Network

Enterprise Security & Compliance

Trusted by Engineering Leaders

Modernizing AI, data, and cloud foundations for regulated industries

The hardest part of AI isn’t the models; it’s everything around them. Most organizations still lack a unified way to measure performance, track cost, govern data, and move workloads into production. We fix that.

Transform AI Exploration Into Measurable Business Impact

We combine engineering expertise with platform-powered delivery to turn your AI investment into a competitive advantage.

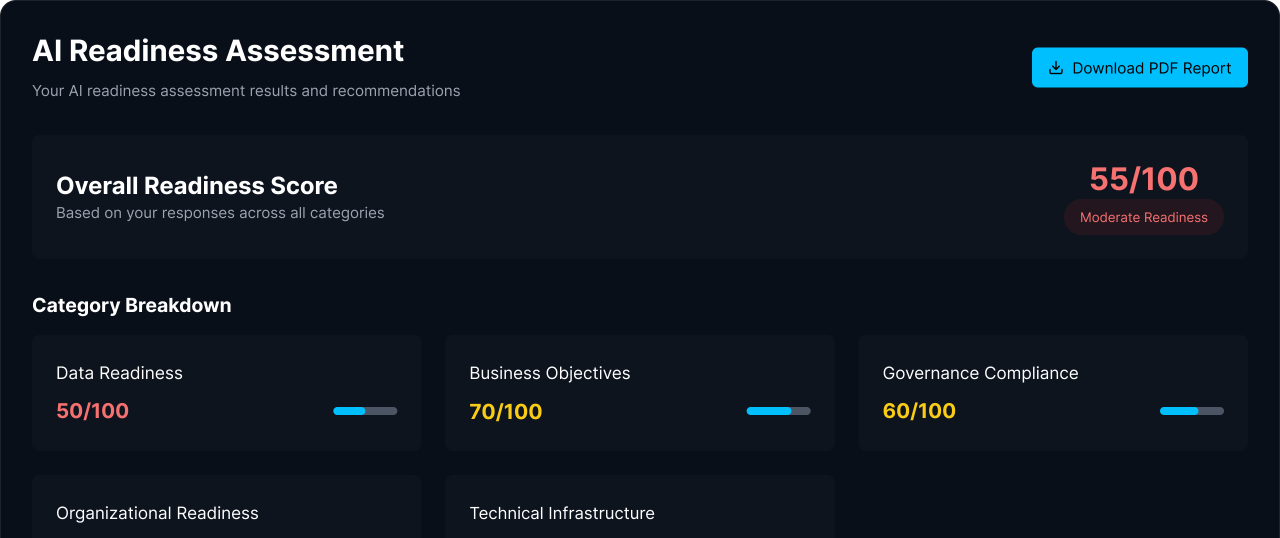

Assessment

Available Today

- Diagnostic for cloud, data, and AI

- Maturity scoring

- Roadmap generation

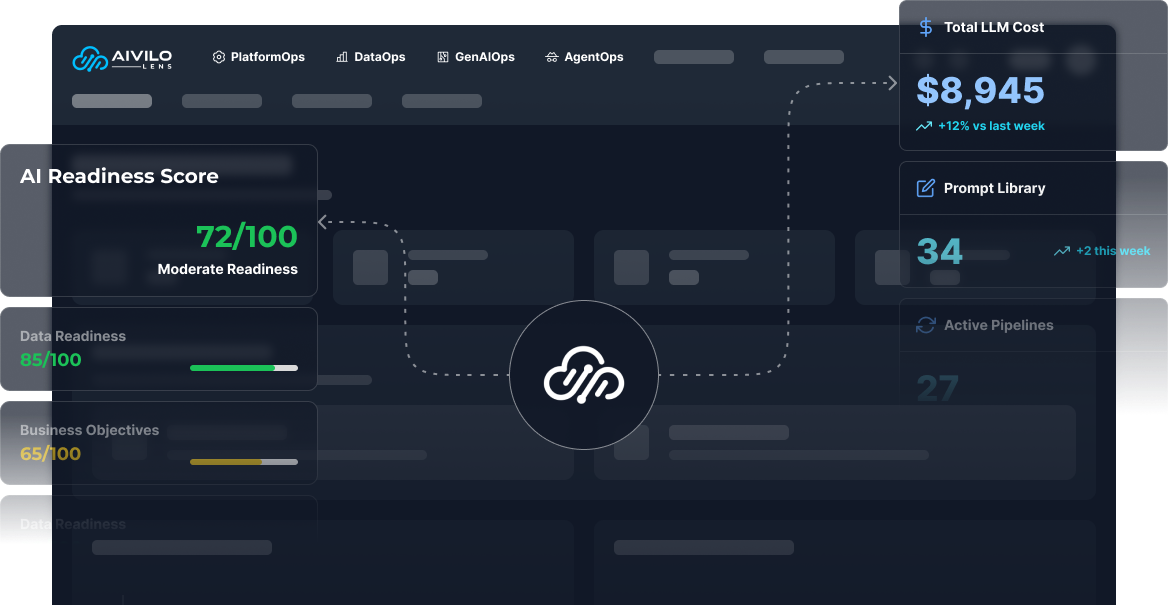

Platform

Lens (Early Access)

- Unified visibility

- Governance and maturity

- Operational backbone for all services

Services

Professional + Managed

- Engineering execution

- Production-grade operations

- Continuous improvement

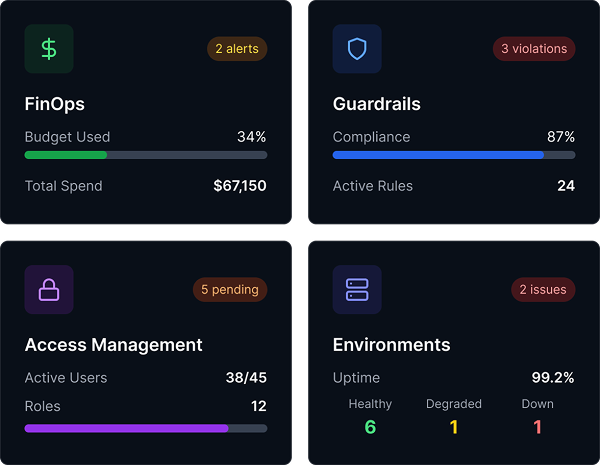

AI Requires Solid Cloud Foundations

See how AI workloads impact your cloud environment and spend. Lens provides the visibility and recommendations needed to keep infrastructure efficient, governed, and scalable.

Understand how every AI project impacts spend

Built-in FinOps components for attribution and forecasting

Guardrails for security, reliability, and cost control
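To make attribution and forecasting concrete, here is a minimal sketch of the general idea, not Lens itself. It assumes each billing line item carries a hypothetical `project` tag, and it uses a naive run-rate forecast; the `CostRecord` shape, the function names, and the sample figures are all illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CostRecord:
    """One billing line item; the field names are hypothetical."""
    project: str  # value of an assumed "project" cost-allocation tag
    day: int      # day of the month, 1-based
    usd: float    # cost of this line item in US dollars


def attribute_by_project(records):
    """Sum spend per project tag."""
    totals = defaultdict(float)
    for r in records:
        totals[r.project] += r.usd
    return dict(totals)


def forecast_month_end(records, days_elapsed, days_in_month):
    """Naive run-rate forecast: average daily spend so far times month length."""
    totals = attribute_by_project(records)
    return {p: total / days_elapsed * days_in_month for p, total in totals.items()}


# Illustrative data only: two days of spend for two made-up AI projects.
records = [
    CostRecord("chatbot", 1, 40.0),
    CostRecord("chatbot", 2, 60.0),
    CostRecord("search-rag", 1, 10.0),
    CostRecord("search-rag", 2, 10.0),
]
by_project = attribute_by_project(records)
forecast = forecast_month_end(records, days_elapsed=2, days_in_month=30)
```

A real forecast would likely account for seasonality and planned workload changes; the run-rate version is only meant to show where per-project attribution feeds into a projection.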

Reliable Data for AI at Scale

Bridge the gap between raw data and production-grade AI by combining data pipelines, governance frameworks, and observability practices into one streamlined operating model.

Governance and observability ensure reliable insights

Built-in compliance and PII protection safeguard sensitive data

Unified data foundation improves collaboration

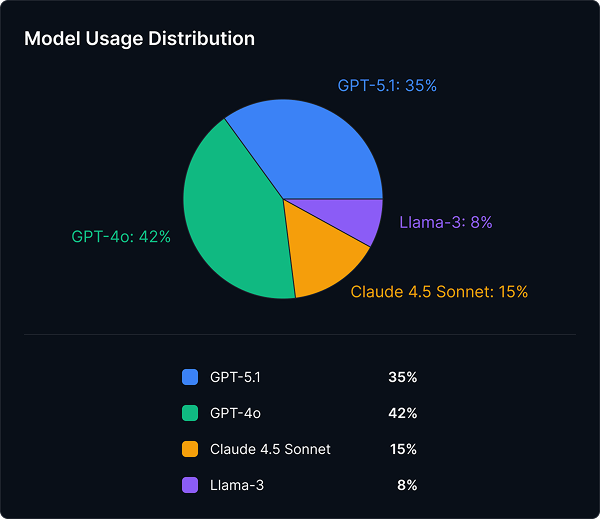

Deep Insight Into How Your AI Applications Behave

GenAIOps provides trace-level and session-level visibility for GenAI applications, helping teams understand model behavior, token consumption, latency, and overall performance.

Track model behavior with trace-level and session-level monitoring

Experiment with prompts using a built-in Prompt Catalogue

See how datasets are used across apps and embedding workflows
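As an illustration of how trace-level records can roll up into session-level views, the sketch below groups model calls by session and summarizes token consumption and latency. It is not the GenAIOps data model: the `Trace` fields and the `summarize_sessions` helper are hypothetical names chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trace:
    """One model call; the field names are hypothetical."""
    session_id: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float


def summarize_sessions(traces):
    """Roll trace-level records up into per-session token and latency totals."""
    grouped = defaultdict(list)
    for t in traces:
        grouped[t.session_id].append(t)

    summary = {}
    for session_id, items in grouped.items():
        summary[session_id] = {
            "calls": len(items),
            "total_tokens": sum(t.prompt_tokens + t.completion_tokens for t in items),
            "max_latency_ms": max(t.latency_ms for t in items),
            "mean_latency_ms": sum(t.latency_ms for t in items) / len(items),
        }
    return summary


# Illustrative data only: three calls across two made-up sessions.
traces = [
    Trace("s1", 100, 50, 200.0),
    Trace("s1", 80, 20, 400.0),
    Trace("s2", 10, 5, 100.0),
]
sessions = summarize_sessions(traces)
```

Keeping the raw traces alongside the session summary lets a team drill from a slow or expensive session down to the individual call responsible.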

Full Observability and Governance for Agentic Workflows

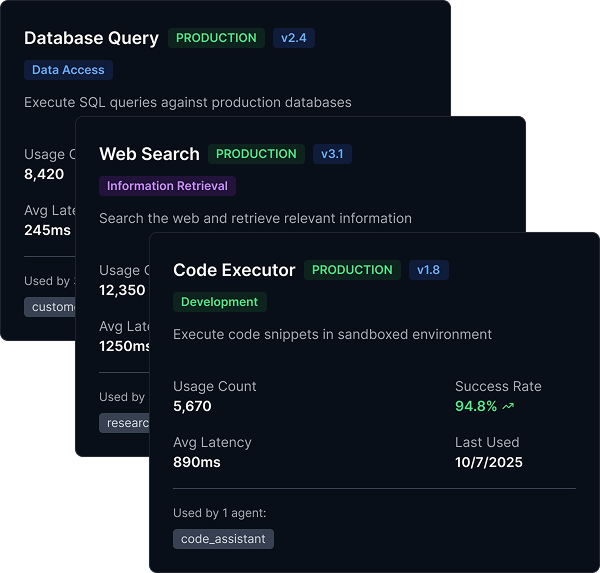

AgentOps helps engineering teams understand how agents think, what tools they call, where they fail, and how to keep them reliable and safe.

Trace-level visibility into agent steps, tool calls, and performance

Guardrails and content filtering to ensure safe, governed agent behavior

Automatic detection of failures, timeouts, and hallucinations

Get Your AI Readiness Assessment

Reveal visibility gaps, cost drivers, production blockers, and readiness risks, all mapped to a prioritized roadmap.